Someone on the NICAR-L listserv asked for advice on the best Python libraries for web scraping. My advice below includes what I did for last spring’s Computational Journalism class, specifically, the Search-Script-Scrape project, which involved 101 web-scraping exercises in Python.


See the repo here: https://github.com/compjour/search-script-scrape

Best Python libraries for web scraping

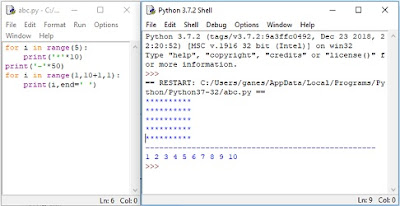

For the remainder of this post, I assume you’re using Python 3.x, though the code examples will be virtually the same for 2.x. For my class last year, I had everyone install the Anaconda Python distribution, which comes with all the libraries needed to complete the Search-Script-Scrape exercises, including the ones mentioned specifically below:

The best package for general web requests, such as downloading a file or submitting a POST request to a form, is the simply named requests library (“HTTP for Humans”).

Here’s an overly verbose example:
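Here is a minimal sketch of both of those tasks. The function names are illustrative, and any URL or form field you pass in is a placeholder rather than something from the class exercises.

```python
import requests


def download_file(url, dest_path):
    """Download url and save the raw bytes to dest_path."""
    response = requests.get(url, timeout=30)
    response.raise_for_status()  # raise an HTTPError on 4xx/5xx status codes
    with open(dest_path, "wb") as f:
        f.write(response.content)  # .content is bytes, so write in binary mode
    return dest_path


def build_form_post(url, fields):
    """Build, but do not send, a form-encoded POST request."""
    return requests.Request("POST", url, data=fields).prepare()


def submit_form(url, fields):
    """Send the form and return the server's response."""
    with requests.Session() as session:
        response = session.send(build_form_post(url, fields), timeout=30)
    response.raise_for_status()
    return response
```

In everyday code, `requests.post(url, data=fields)` does the same thing in one call. Splitting out the prepared request is just the verbose route, and it lets you inspect the encoded body before anything is sent.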

The requests library even does JSON parsing if you use it to fetch JSON files. Here’s an example with the Google Geocoding API:
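A sketch of that pattern, assuming the current Geocoding API endpoint, which expects an API key (the `api_key` argument is a placeholder you'd supply yourself). `response.json()` turns the body into plain dicts and lists, so the rest is ordinary dictionary access:

```python
import requests

GEOCODE_URL = "https://maps.googleapis.com/maps/api/geocode/json"


def geocode(address, api_key):
    """Return the decoded JSON from the Geocoding API for one address."""
    params = {"address": address, "key": api_key}  # requests URL-encodes these
    response = requests.get(GEOCODE_URL, params=params, timeout=30)
    response.raise_for_status()
    return response.json()  # parsed JSON: nested dicts and lists


def first_lat_lng(data):
    """Return (lat, lng) of the top result, or None if nothing matched."""
    if data.get("status") != "OK" or not data.get("results"):
        return None
    location = data["results"][0]["geometry"]["location"]
    return location["lat"], location["lng"]
```

Keeping the parsing in its own function means you can test it on a saved response without burning through your rate limit.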

For parsing HTML and XML, Beautiful Soup 4 seems to be the most frequently recommended library. I never got around to using it because it was malfunctioning on my particular installation of Anaconda on OS X.

But I’ve found lxml to be perfectly fine. I believe both lxml and bs4 have similar capabilities – you can even specify lxml to be the parser for bs4. I think bs4 might have a friendlier syntax, but again, I don’t know, as I’ve gotten by with lxml just fine:
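As a rough illustration of the lxml style (a sketch, not the class solution code), here is how you might pull every link out of a page and keep only the ones that look like data files. The list of file extensions is an assumption; adjust it to whatever the site actually serves.

```python
from lxml import html


def extract_links(page_html):
    """Return (link text, href) pairs for every <a> tag that has an href."""
    doc = html.fromstring(page_html)
    return [(a.text_content().strip(), a.get("href"))
            for a in doc.xpath("//a[@href]")]  # XPath: anchors with an href


def data_file_links(page_html, extensions=(".zip", ".xls", ".xlsx", ".csv")):
    """Keep only hrefs that end in one of the given file extensions."""
    return [href for _, href in extract_links(page_html)
            if href.lower().endswith(extensions)]
```

With bs4, the equivalent would be `BeautifulSoup(page_html, "lxml").find_all("a", href=True)`, which uses lxml as the underlying parser.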

The standard urllib package also has a lot of useful utilities – I frequently use the functions in urllib.parse. Python 2 also has urllib, but its functions are arranged differently.

Here’s an example of using the urljoin function to resolve the relative links on the California state data page for high school test scores. The use of os.path.basename is simply for saving each spreadsheet to your local hard drive:
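A standard-library sketch of those two steps. The page and file URLs are made-up placeholders, since the real address of the California test-score page isn't given here:

```python
import os
from urllib.parse import urljoin, urlparse


def absolute_links(page_url, hrefs):
    """Resolve each (possibly relative) href against the page it came from."""
    return [urljoin(page_url, href) for href in hrefs]


def local_filename(file_url):
    """Use the last segment of the URL's path (no query string) as a file name."""
    return os.path.basename(urlparse(file_url).path)
```

Running the URL through `urlparse` first keeps a trailing `?download=1`-style query string out of the saved file name.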

And that’s about all you need for the majority of web-scraping work – at least the part that involves reading HTML and downloading files.

Examples of sites to scrape

The 101 scraping exercises didn’t go so great, as I didn’t give enough specifics about what the exact answers should be (e.g. round the numbers? Use complete sentences?) or even where the data files actually were – as it so happens, not everyone Googles things the same way I do. And I should’ve made them do it on a weekly basis, rather than waiting till the end of the quarter to try to cram them in before finals week.

The GitHub repo lists each exercise with the solution code, the relevant URL, and the number of lines in the solution code.

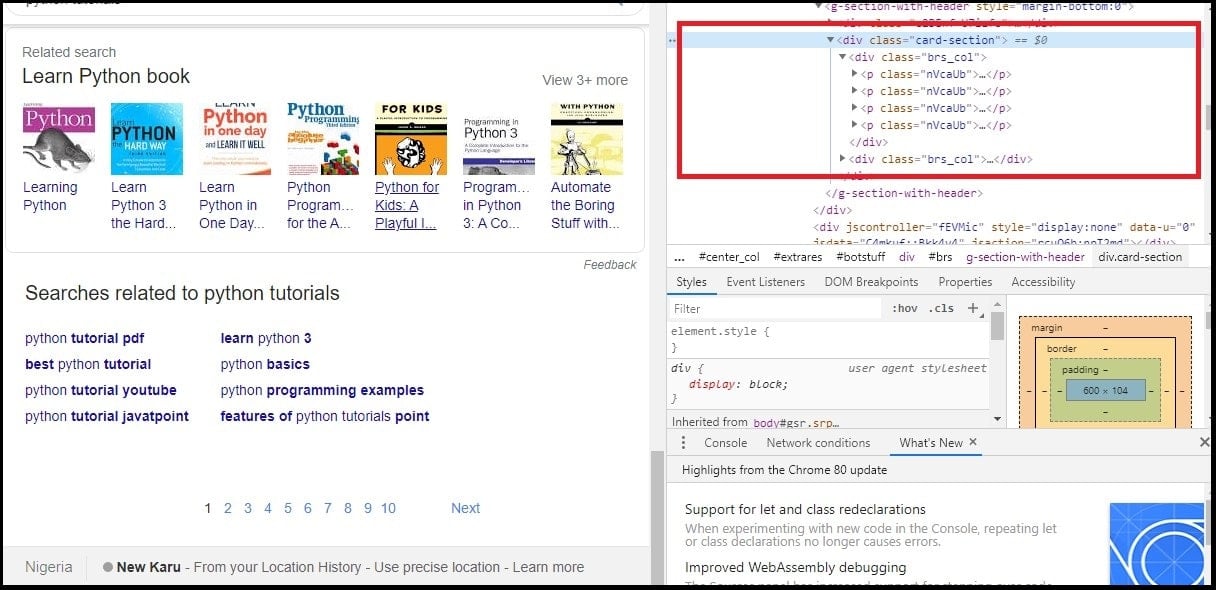

The exercises run the gamut from simple parsing of static HTML to inspecting AJAX-heavy sites, in which knowledge of the network panel is required to discover the JSON files to grab. In many of these exercises, the HTML parsing is the trivial part – just a few lines to parse the HTML and dynamically find the URL for the zip or Excel file to download (via requests)…and then 40 to 50 lines of unzipping/reading/filtering to get the answer. That part is beyond what is typically considered “web scraping” and falls more into “data wrangling”.

I didn’t sort the exercises on the list by difficulty, and many of the solutions are not particularly great code. Sometimes I wrote the solution as if I were teaching it to a beginner. But other times I solved the problem in the most randomly bizarre way relative to how I would normally solve it – hey, writing 100+ scrapers gets boring.

But here are a few representative exercises with some explanation:

1. Number of datasets currently listed on data.gov

I think data.gov actually has an API, but this script relies on finding the easiest tag to grab from the front page and extracting the text, i.e. the 186,569 from the text string, '186,569 datasets found'. This is obviously not a very robust script, as it will break when data.gov is redesigned. But it serves as a quick and easy HTML-parsing example.
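The fragile part is the selector, which depends on data.gov's markup at any given time, so only the text-to-number step is sketched here. The regular expression assumes the count is followed by the word "datasets", as in the example string:

```python
import re


def parse_count(text):
    """Turn a string like '186,569 datasets found' into the integer 186569."""
    match = re.search(r"([\d,]+)\s+datasets", text)
    if match is None:
        raise ValueError(f"no dataset count in {text!r}")
    return int(match.group(1).replace(",", ""))  # strip thousands separators
```

Raising an error instead of returning a default makes the inevitable redesign fail loudly rather than silently report a wrong number.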

29. Number of days until Texas’s next scheduled execution

Texas’s death penalty site is probably one of the best places to practice web scraping, as the HTML is pretty straightforward on the main landing pages (there are several, for scheduled and past executions, and current inmate roster), which have enough interesting tabular data to collect. But you can make it more complex by traversing the links to collect inmate data, mugshots, and final words. This script just finds the first person on the scheduled list and does some math to print the number of days until the execution. (I probably made the datetime handling more convoluted than it needs to be in the provided solution.)
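The date math itself can be short. This sketch assumes the scheduled date is scraped as an `MM/DD/YYYY` string; check the page, and change `fmt` if the site uses another format:

```python
from datetime import date, datetime


def days_until(date_text, today=None, fmt="%m/%d/%Y"):
    """Whole days from today until the date in date_text (negative if past)."""
    target = datetime.strptime(date_text.strip(), fmt).date()
    today = today or date.today()
    return (target - today).days  # subtracting dates gives a timedelta
```

Passing `today` explicitly is optional, but it makes the function easy to check against known dates.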

3. The number of people who visited a U.S. government website using Internet Explorer 6.0 in the last 90 days

The analytics.usa.gov site is a great place to practice AJAX-data scraping. It’s a very simple and robust site, but either you are aware of AJAX and know how to use the network panel (and, in this case, locate ie.json), or you will have no clue how to scrape even a single number on this webpage. I think the difference between static HTML and AJAX sites is one of the tougher things to teach novices. But they pretty much have to learn the difference, given how many of today’s websites use both static and dynamically rendered pages.
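Once the network panel reveals the JSON file, you skip the HTML entirely and request that URL directly. Here is a sketch. The field names `browser_version` and `visits` are hypothetical stand-ins, so open the real ie.json in your browser and adjust the keys to match:

```python
import requests


def fetch_json(url):
    """Request a JSON endpoint copied from the browser's network panel."""
    response = requests.get(url, timeout=30)
    response.raise_for_status()
    return response.json()


def visits_for_version(rows, version,
                       version_key="browser_version", visits_key="visits"):
    """Sum visits over rows for one browser version.

    ASSUMPTION: the default key names are hypothetical; inspect the real JSON.
    """
    return sum(int(row[visits_key]) for row in rows
               if row.get(version_key) == version)
```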

6. From 2010 to 2013, the change in median cost of health, dental, and vision coverage for California city employees

There’s actually no HTML parsing if you assume the URLs for the data files can be hard coded. So besides the nominal use of the requests library, this ends up being a data-wrangling exercise: download two specific zip files, unzip them, read the CSV files, filter the dictionaries, then do some math.
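The wrangling steps map onto the standard library fairly directly. In this sketch, the zip bytes would come from something like `requests.get(url).content`. The column names used with it (`EntityType`, `HealthDentalVision`) are hypothetical, as is the UTF-8 encoding, so check the real files' headers and encoding:

```python
import csv
import io
import statistics
import zipfile


def rows_from_zip(zip_bytes, encoding="utf-8"):
    """Read every CSV inside an in-memory zip archive into a list of dicts."""
    rows = []
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as zf:
        for name in zf.namelist():
            if name.lower().endswith(".csv"):  # skip readmes, PDFs, etc.
                with zf.open(name) as raw:
                    text = io.TextIOWrapper(raw, encoding=encoding, newline="")
                    rows.extend(csv.DictReader(text))
    return rows


def median_cost(rows, cost_col, filter_col, filter_val):
    """Median of cost_col over rows whose filter_col equals filter_val."""
    values = [float(row[cost_col]) for row in rows
              if row.get(filter_col) == filter_val and row.get(cost_col)]
    return statistics.median(values)
```

The year-over-year change is then just the difference between `median_cost` on the 2013 rows and on the 2010 rows.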

90. The currently serving U.S. congressmember with the most Twitter followers

Another example with no HTML parsing, but probably the most complicated one. You have to download and parse the Sunlight Foundation’s CSV of Congressmember data to get all the Twitter usernames. Then authenticate with Twitter’s API, then perform multiple batch lookups to get the data for all 500+ of the Congressional Twitter usernames. Then join the sorted result with the actual Congressmember identity. I probably shouldn’t have assigned this one.
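The batching and joining steps can be sketched without touching Twitter at all. Twitter's user-lookup endpoints have taken up to 100 names per request, hence the default batch size. The API call itself is left out because access rules have changed a lot since. The `twitter_id` column name is an assumption about the CSV:

```python
def chunked(items, size=100):
    """Split items into lists of at most `size` elements, one per API call."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def most_followed(members, follower_counts, handle_key="twitter_id"):
    """Return the member whose handle has the most followers, or None.

    members: dicts from the legislator CSV (handle_key is hypothetical).
    follower_counts: lowercase handle -> follower count, built from the
    batched lookups. Handles are lowercased since Twitter ignores case.
    """
    with_handles = [m for m in members if m.get(handle_key)]
    return max(with_handles,
               key=lambda m: follower_counts.get(m[handle_key].lower(), 0),
               default=None)
```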

HTML is not necessary

I included no-HTML exercises because there are plenty of data programming exercises that don’t have to deal with the specific nitty-gritty of the Web, such as understanding HTTP and/or HTML. It’s not just that a lot of public data has moved to JSON (e.g. the FEC API) – but that much of the best public data is found in bulk CSV and database files. These files can be programmatically fetched with simple usage of the requests library.

It’s not that parsing HTML isn’t a whole boatload of fun – and being able to do so is a useful skill if you want to build websites. But I believe novices have more than enough to learn in sorting and filtering dictionaries and lists without worrying about learning how a website works.

Besides analytics.usa.gov, the data.usajobs.gov API, which lists federal job openings, is a great one to explore, because its data structure is simple and the site is robust. Here’s a Python exercise with the USAJobs API; and here’s one in Bash.
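For reference, here is a sketch against the USAJobs search API as I understand its current form. The `/api/search` endpoint, the `Host`/`User-Agent`/`Authorization-Key` headers, and the `SearchResult` response layout are all assumptions to verify against the USAJobs developer docs. The email and key are placeholders for your own registration:

```python
import requests

SEARCH_URL = "https://data.usajobs.gov/api/search"  # assumed endpoint


def build_search(keyword, email, api_key):
    """Prepare (but don't send) a USAJobs search request."""
    headers = {
        "Host": "data.usajobs.gov",
        "User-Agent": email,           # assumed: the email you registered with
        "Authorization-Key": api_key,  # assumed: the key USAJobs issues you
    }
    return requests.Request("GET", SEARCH_URL, params={"Keyword": keyword},
                            headers=headers).prepare()


def position_titles(data):
    """List job titles from a decoded search response (assumed field names)."""
    items = data.get("SearchResult", {}).get("SearchResultItems", [])
    return [item["MatchedObjectDescriptor"]["PositionTitle"] for item in items]
```

To actually run the search, send the prepared request with `requests.Session().send(req)` and pass `response.json()` to `position_titles`.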

There’s also the Google Maps geocoding API, which can be hit up for a bit before you run into rate limits, and you get the bonus of teaching geocoding concepts. The NYTimes API requires creating an account, but you get good APIs not only for some political data but also for content data (e.g. articles, bestselling books) that is interesting fodder for journalism-related analysis.

But if you want to scrape HTML, then the Texas death penalty pages are the way to go, because of the simplicity of the HTML and the numerous ways you can traverse the pages and collect interesting data points. Besides the previously mentioned Texas Python scraping exercise, here’s one for Florida’s list of executions. And here’s a Bash exercise that scrapes data from Texas, Florida, and California and does a simple demographic analysis.
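Pages like these are mostly HTML tables, so a generic table-to-dicts helper covers a lot of ground. This is a sketch. The sample HTML in the usage check is a made-up stand-in, not the Texas site's actual markup or column names:

```python
from lxml import html


def table_rows(page_html):
    """Turn the first <table> into a list of dicts keyed by its <th> cells."""
    doc = html.fromstring(page_html)
    table = doc.xpath("//table")[0]
    header_row = table.xpath(".//tr[th]")[0]  # first row containing <th> cells
    headers = [th.text_content().strip() for th in header_row.xpath("./th")]
    records = []
    for tr in table.xpath(".//tr[td]"):       # data rows contain <td> cells
        cells = [td.text_content().strip() for td in tr.xpath("./td")]
        records.append(dict(zip(headers, cells)))
    return records
```

To traverse further (inmate info, last statements), you could also collect `tr.xpath(".//a/@href")` for each row and resolve those links with `urljoin`.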

If you want more interesting public datasets – most of which require only a minimal amount of HTML parsing to fetch – check out the list I talked about in last week’s info session on Stanford’s Computational Journalism Lab.

Hey data hackers! Looking for a rapid way to pull down unstructured data from the Web? Here’s a 5-minute analytics workout covering two simple approaches to scraping the same set of real-world web data, using either Excel or Python. All of this is done with 13 lines of Python code or one filter and 5 formulas in Excel.


All of the code and data for this post are available at GitHub here. Never scraped web data in Python before? No worries! The Jupyter notebook is written in an interactive, learning-by-doing style that walks anyone without knowledge of web scraping in Python through the process of understanding web data and writing the related code step by step. Stay tuned for a streaming video walkthrough of both approaches.

Huh? Why scrape web data?

If you’ve not found the need to scrape web data yet, it won’t be long … Much of the data you interact with daily on the web is not structured in a way that lets you easily pull it down for analysis. Reading your morning news feed and spot a great data table in an article? Unless the journalist links to machine-readable data, you’ll have to scrape it straight from the article itself! Looking to find the best deal across multiple shopping websites? Best believe that they’re not offering easy ways to download those data and compare! In this small example, we’ll explore augmenting one’s Kindle library with Audible audiobooks.

Audiobooks whilst traveling …

When in transit during travel (ahh, travel … I remember that …) I listen to podcasts and audiobooks. I was, the other day, wondering what the total cost would be to add Audible audiobook versions of every Kindle book that I own, where they are available. The clever people at Amazon have anticipated just such a query, and offer the Audible Matchmaker tool, which scans your Kindle library and offers Audible versions of Kindle books you own.

Sadly, there is not an option to “buy all” or even the convenience function of a “total cost” calculation anywhere. “No worries,” I thought, “I’ll just knock this into a quick Excel spready and add it up myself.”


Part I: Web Scraping in Excel

Excel has become super friendly to non-spreadsheet data in recent years. To wit, I copied the entire page (after clicking the “more” paging button until all available titles were shown on one page) and simply pasted it into a tab in the spready.

Removing all of the images left us with a column containing a mishmash of text, only some of which is useful to our objective of calculating the total purchase price for all unowned Audible books. Filtering on the repeating “Audible Upgrade Price” text reduces the column down to the values we are after.

Perfect! All that remains is a bit of text processing to extract the prices as numbers we can sum. All of these steps are detailed in the accompanying spreadsheet and data package to this post.

$465.07 … not a horrible price for 60 audiobooks … just under $8 each ($465.07 / 60 ≈ $7.75). (As an Audible subscriber, I pay $15 for each month’s book credit, so this is roughly half of that cost and not a bad deal …) NB – we could have used Excel’s cool Web Query capabilities to import the data from the website, though we’ll cover that separately, along with scraping Web data that requires a login.

I’m sure my Kindle library is like yours, in that it significantly expands with each passing week, so it occurred to me that this won’t be the last time I have this question. Let’s make this repeatable by coding a solution in Python.


Part II: Web Scraping in Python

The approach in Python is quite similar, conceptually, to the Excel-based approach.

- Pull the data from the Audible Matchmaker page

- Parse it into something mathematically useful & sum audiobook costs

Copy the data from the Audible Matchmaker page

The BeautifulSoup library in Python provides an easy interface to scraping Web data. (It’s actually quite a bit more useful than that, but let’s discuss that another time.) We simply load the BeautifulSoup class from the bs4 module and use it to parse the HTML text of the response returned by the requests module’s get() function.
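A minimal sketch of that load step. The Matchmaker page only shows your library when you're signed in, so a bare request will most likely get a login page instead. The optional `session` argument is a hypothetical hook for a session that already carries your login cookies, which this post doesn't cover:

```python
import requests
from bs4 import BeautifulSoup


def soup_from_html(page_html):
    """Parse HTML text with Python's built-in parser."""
    return BeautifulSoup(page_html, "html.parser")


def get_soup(url, session=None):
    """Fetch url and parse the response body (response.text, not the object)."""
    http = session or requests.Session()
    response = http.get(url, timeout=30)
    response.raise_for_status()
    return soup_from_html(response.text)
```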

Parse the data into something mathematically useful

Similar to our approach in Excel, we’ll use the BeautifulSoup module to filter the page elements down to the price values we’re after. We do this in 3 steps:

- Find all of the span elements which contain the price of each Audible book

- Convert the data in the span elements to numbers

- Sum them up!
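Those three steps can be sketched as one function. The `price` class name is a placeholder; inspect the Matchmaker page to find whatever class or attribute actually marks the “Audible Upgrade Price” spans:

```python
from decimal import Decimal

from bs4 import BeautifulSoup


def total_price(soup, price_class="price"):
    """Sum every <span> with the given class, read as a dollar amount."""
    total = Decimal("0")
    for span in soup.find_all("span", class_=price_class):  # 1. find the spans
        text = span.get_text(strip=True).replace("$", "").replace(",", "")
        if text:
            total += Decimal(text)  # 2. convert each price to a number
    return total                    # 3. the running sum is the answer
```

Using `Decimal` instead of `float` keeps the total exact to the cent, which matters once you're adding up 60-odd prices.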

Donezo! See the accompanying Jupyter Notebook for a detailed walkthrough of this code.

There it is – scraping web data in 5 minutes using both Excel and Python! Stay tuned for a streamed video walkthrough of both approaches.